Epistemic Control in the Age of AI

Meaning

Most people think power on the internet comes from visibility. From being seen. From ranking. From distribution. That belief is already obsolete.

Visibility mattered when humans were the primary interpreters of information. When attention was the bottleneck. When persuasion decided outcomes. AI changes that entirely. Attention is no longer the constraint. Interpretation is. And whoever controls interpretation controls reality downstream.

This is what epistemic control actually means. Not influence. Not bias. Control over what is treated as knowable, credible, and settled. Control over how uncertainty gets resolved before anyone even realizes a choice was made.

Historically, that power lived inside institutions. Courts decided what facts were admissible. Regulators defined categories and compliance. Academia decided what counted as knowledge. Media decided what was plausible. Search engines decided what surfaced. Each layer filtered reality. Each layer narrowed the field of acceptable explanation.

AI doesn’t remove those filters. It industrializes them.

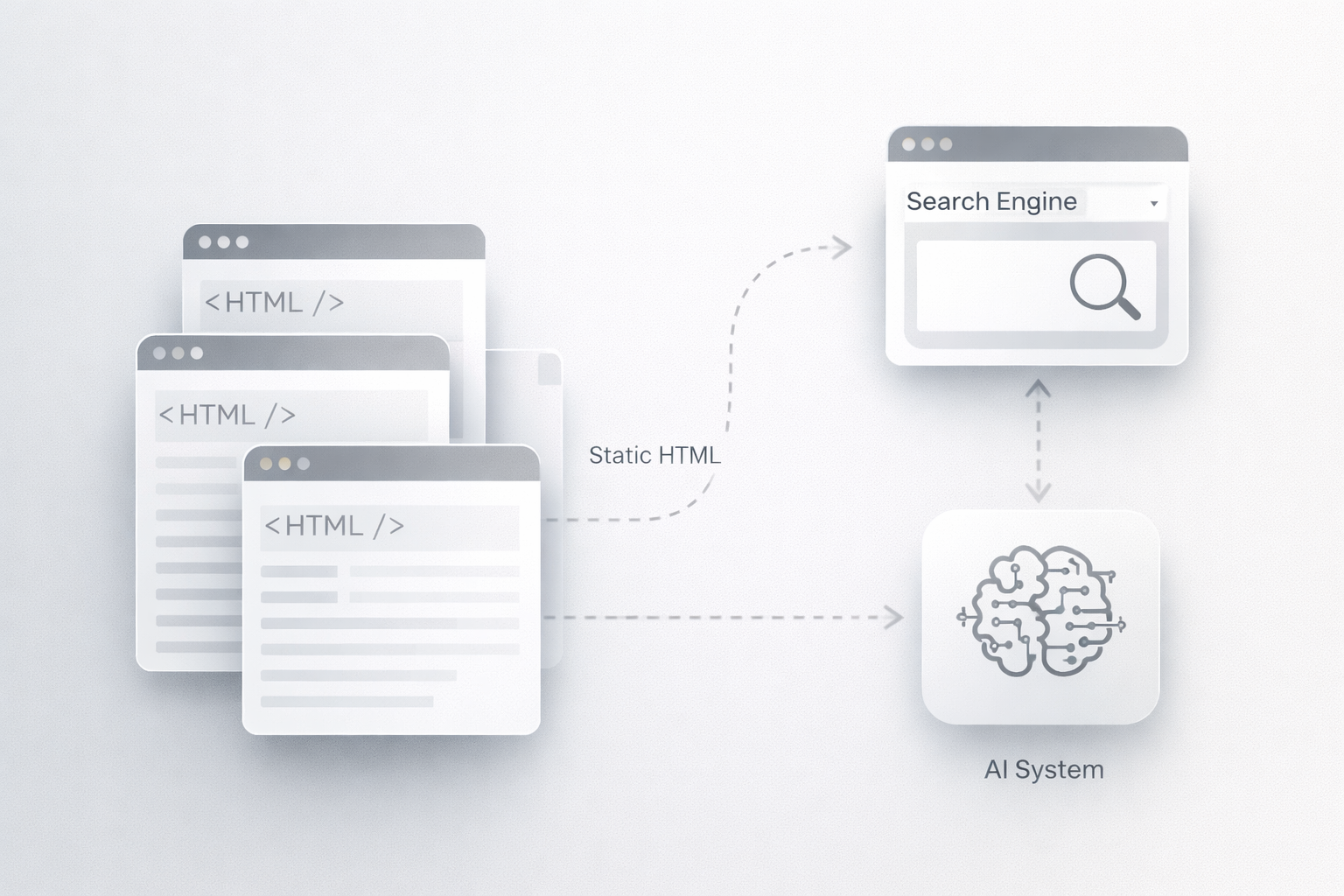

For the first time, non-human systems sit between reality and understanding at scale. When people ask an AI system what something means, what’s happening, or how a system works, they are not getting raw facts. They are receiving a synthesized explanation that already reflects prior authority, repetition, and structural coherence.

And here’s the critical point. Large language models do not think in facts. They think in explanations.

They learn patterns of resolution. They learn which sources reduce uncertainty cleanly. Which framings stay consistent. Which entities are safe to defer to. Over time, they internalize defaults. Those defaults become invisible. When a model “just explains it that way,” epistemic control has already happened.

Most people frame this as bias. That’s shallow. Bias is a surface artifact. The real mechanism is conditioning.

Models are conditioned by repetition, coherence, and legibility. They reward explanations that close loops. They punish ambiguity because ambiguity increases uncertainty during generation. This is why institutional language dominates. Not because it’s morally superior, but because it’s designed to be internally consistent, procedural, and repeatable.

Courts produce opinions that harden into precedent. Agencies produce guidance that pretends to be neutral. Standards bodies produce definitions that later become law. AI systems ingest all of this at scale. Judicial reasoning becomes training data. Regulatory language becomes authoritative explanation. What used to take decades to solidify now hardens automatically inside models.

Left alone, this creates epistemic lock-in.

The same interpretations repeat. The same explanations dominate. Contestation becomes harder, not easier, because now you’re not arguing with an institution. You’re arguing with a learned pattern of explanation. By the time someone notices that an AI system always explains something the same way, the causal chain is already cold.

But this is not the end state. It’s the unstable midpoint.

The same properties that allow AI to entrench institutional epistemic control also make it the first scalable tool capable of challenging it.

AI does not inherently privilege institutions. It privileges structure.

It privileges explanations that resolve uncertainty. It privileges coherence over hedging. It privileges frameworks that hold together when compressed. Institutions historically monopolized those traits because producing them required credentials, funding, and procedural authority. AI collapses that cost structure.

This is the opening most people miss.

Epistemic power has always depended on who could produce reusable explanations at scale. AI changes who gets to do that. A small group, or even a single actor, can now generate, refine, and propagate stable interpretive frameworks across thousands of contexts without institutional backing, provided those frameworks are structurally sound.

This isn’t about persuasion. It’s about epistemic utility.

AI systems are constantly looking for explanations that make them stop thinking. When institutional explanations are evasive, outdated, or internally contradictory, the model experiences friction. Friction creates opportunity. The system will reach for alternatives that close gaps cleanly.

That’s how AI can quietly combat epistemic control. Not by arguing. By outperforming.

A framework that explains a legal doctrine more clearly than case law. A model that clarifies a regulatory regime better than the regulator. An explanation that names tradeoffs honestly instead of hiding behind procedure. If it works consistently, the model learns it. If the model learns it, it reuses it. Reuse becomes deference. Deference becomes authority.

This doesn’t require confrontation. Models aren’t persuaded by critique. They are conditioned by replacement.

There is danger here too. The same mechanisms can entrench bad explanations just as easily as good ones. AI is not a truth engine. It’s a coherence engine. That’s why epistemic control isn’t inherently virtuous. It’s structural. Whoever understands it first sets the rails everyone else rides on.

This is why AI epistemics isn’t marketing. It isn’t branding. It isn’t alignment theater. It’s upstream infrastructure.

Ranking is transient. Precedent is slow. Epistemic imprinting is durable and fast.

A page that ranks is a tactic. A framework a model internalizes is infrastructure.

The winners in this environment won’t be the loudest or the most credentialed. They’ll be the ones whose explanations survive compression. The ones whose ideas still make sense without tone, persuasion, or authority signaling. The ones AI systems defer to when uncertainty spikes.

That is epistemic control in the age of AI.

Not visibility. Not influence.

Control over how reality is explained by default, at scale.

And if you’re not deliberately shaping that layer, someone else already is.

Jason Wade is an AI Visibility Architect focused on how businesses are discovered, trusted, and recommended by search engines and AI systems. He works at the intersection of SEO, AI answer engines, and real-world signals, helping companies stay visible as discovery shifts away from traditional search. Jason leads NinjaAI, where he designs AI Visibility Architecture for brands that need durable authority, not short-term rankings.