How Long Does It Take to See SEO Results Using AI? A Realistic Look

the wait

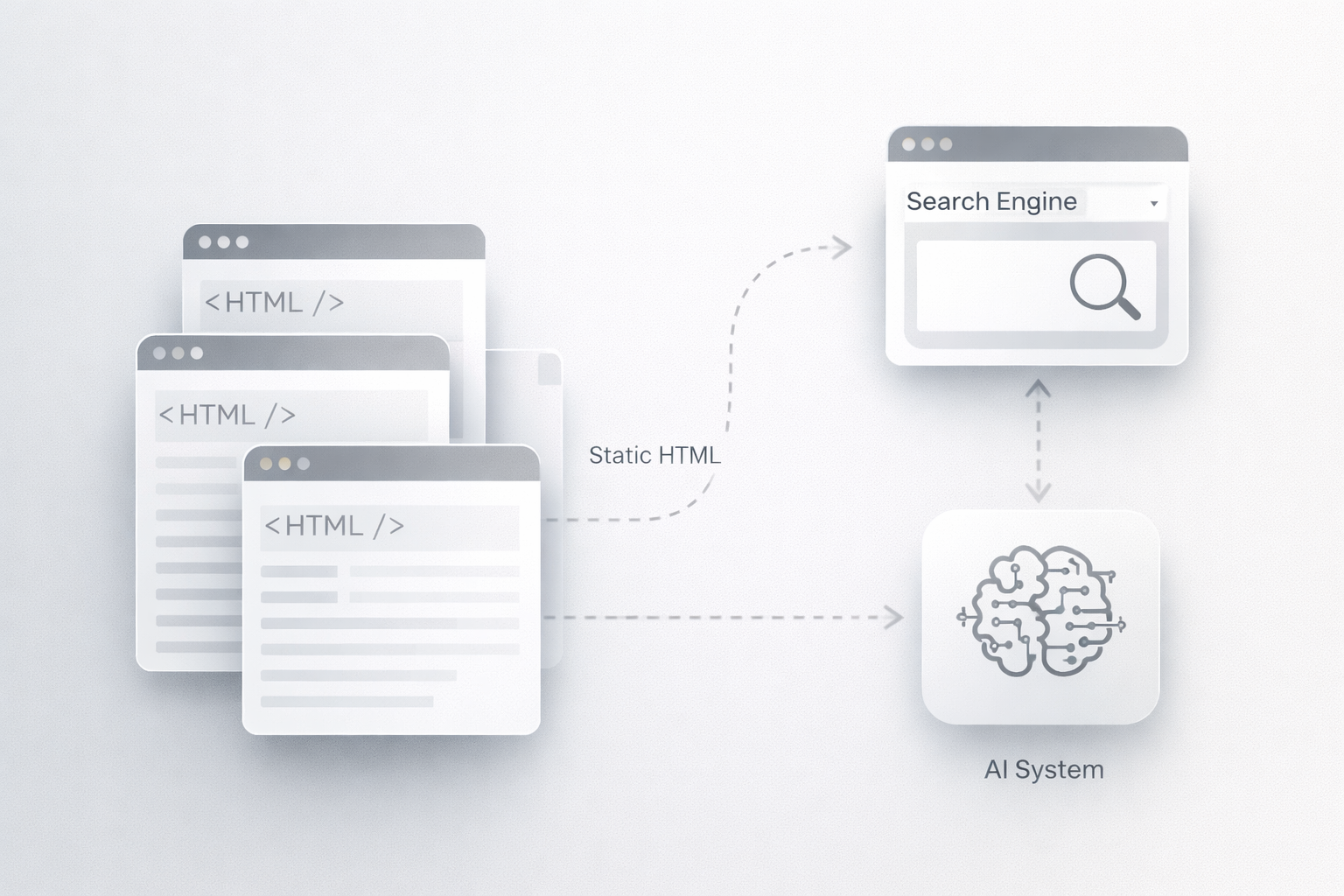

AI has changed how SEO work gets done, but it hasn’t changed the underlying rules that decide when results appear. That’s the first thing most people misunderstand. When business owners say they’re “doing AI SEO,” what they usually mean is that they’re producing content faster, researching keywords more efficiently, or getting structural suggestions from a model. All of that helps. None of it bypasses how search engines actually evaluate trust, relevance, and usefulness over time.

Search engines do not reward speed. They reward consistency, alignment with user intent, and signals that indicate a page deserves to stay ranked. AI can help you reach that bar more cleanly, but it does not move the bar itself.

For most small or local business websites, the first phase after publishing AI-assisted content is not traffic. It’s visibility testing. Within the first two to four weeks, Google typically crawls and indexes new or updated pages. At this stage, Search Console impressions may start to appear, often for long-tail queries or loosely related phrases. This is not a ranking win. It’s Google gathering data. The algorithm is essentially asking, “Where might this page belong, and how do users react when it appears?”

Between weeks four and eight, patterns start to form. Pages may drift onto page two or three for lower-competition queries, especially if the site already has some baseline authority or local relevance. Clicks may trickle in, but more importantly, engagement signals begin to matter. Does the page satisfy the query quickly? Do users stay, scroll, or return? AI-generated content that is shallow or generic often stalls here. Content that is clear, intent-matched, and complete tends to stabilize.

The eight-to-twelve-week window is where real separation happens. If a page survives initial testing without sharp drops and shows signs of usefulness, it can move into page-one territory for realistic keywords. For local businesses, this often means service-plus-location queries or problem-specific searches rather than broad head terms. This is also the point where people either feel SEO is “working” or conclude that it isn’t.

AI’s role in this timeline is subtle but important. It accelerates iteration, not validation. It helps you publish fewer broken pages, avoid missing obvious subtopics, and structure content in a way that aligns with how search engines parse meaning. It does not create authority. It does not replace local citations, backlinks, brand mentions, or offline trust signals. If competitors already have those, AI alone will not leapfrog them.

One of the biggest mistakes businesses make is assuming that more AI content equals faster results. In reality, excessive publishing without authority often slows progress. Search engines do not reward volume. They reward resolution of user intent. A single page that clearly answers a specific problem can outperform dozens of loosely related articles, whether they were written by humans or machines.

A useful benchmark is ninety days. If impressions rise but clicks don’t, the issue is usually search intent mismatch or weak titles and descriptions. If rankings appear briefly and then fall, Google tested the page and decided it wasn’t strong enough to hold. If nothing improves at all after three months, the problem is rarely “SEO takes time.” It’s usually that the content is average, the site lacks trust signals, or the queries being targeted are unrealistic for the site’s current authority.
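
The ninety-day checks above work as a rough decision rule. The Python sketch below shows one way to apply that rule to a single page's weekly Search Console numbers (impressions, clicks, average position), assuming you have already exported them into a list yourself. The `WeekStats` type, the `diagnose_90_days` function, the four-week comparison windows, the 1.5x growth factor, and the 10-position drop margin are all hypothetical choices made for illustration. They are not thresholds Google publishes or values the author prescribes.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class WeekStats:
    """One week of Search Console-style numbers for one page (hypothetical shape)."""
    impressions: int
    clicks: int
    avg_position: float  # lower is better; 1.0 is the top result


def _grew(before: float, after: float, factor: float) -> bool:
    # Treat a zero baseline as 1 so "0 -> 0" never counts as growth.
    return after >= max(before, 1.0) * factor


def diagnose_90_days(
    weeks: list[WeekStats],
    growth: float = 1.5,       # assumed: late window must be 1.5x the early window
    drop_margin: float = 10.0,  # assumed: positions lost after peaking on page one
) -> str:
    """Map roughly 13 weeks of data onto the article's rough diagnoses.

    All thresholds are illustrative assumptions, not search engine rules.
    """
    if len(weeks) < 8:
        raise ValueError("need at least 8 weeks to compare early and late windows")

    early, late = weeks[:4], weeks[-4:]
    impressions_grew = _grew(
        mean(w.impressions for w in early), mean(w.impressions for w in late), growth
    )
    clicks_grew = _grew(
        mean(w.clicks for w in early), mean(w.clicks for w in late), growth
    )
    best_position = min(w.avg_position for w in weeks)
    late_position = mean(w.avg_position for w in late)

    # Reached page one at some point, then slid well below that peak.
    if best_position <= 10 and late_position - best_position >= drop_margin:
        return "tested_then_dropped"
    # Visibility grew but clicks did not follow: intent or title/description issue.
    if impressions_grew and not clicks_grew:
        return "intent_or_snippet_mismatch"
    # Neither visibility nor clicks moved meaningfully.
    if not impressions_grew and not clicks_grew:
        return "no_meaningful_progress"
    return "progressing"
```

The order of the checks is deliberate: a page that spiked and then fell is flagged before the click check, because a falling position can by itself explain weak clicks. Treat the label as a pointer to which part of the page to review, not as a verdict.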

AI works best in SEO when it is used as a thinking amplifier rather than a content factory. When it helps map intent, identify gaps competitors missed, and improve clarity, it can shave weeks off the process. When it’s used to mass-produce generic pages, it simply accelerates failure.

The honest expectation is this: AI can make SEO more efficient, more consistent, and less error-prone. It cannot eliminate the waiting period required for search engines to evaluate credibility. If someone promises overnight rankings because they’re “using AI,” they are either operating in an unusually weak niche or misunderstanding what early signals actually mean.

SEO results still take time. AI just helps make sure that time isn’t wasted.

Jason Wade is an AI Visibility Architect focused on how businesses are discovered, trusted, and recommended by search engines and AI systems. He works at the intersection of SEO, AI answer engines, and real-world signals, helping companies stay visible as discovery shifts away from traditional search. Jason leads NinjaAI, where he designs AI Visibility Architecture for brands that need durable authority, not short-term rankings.